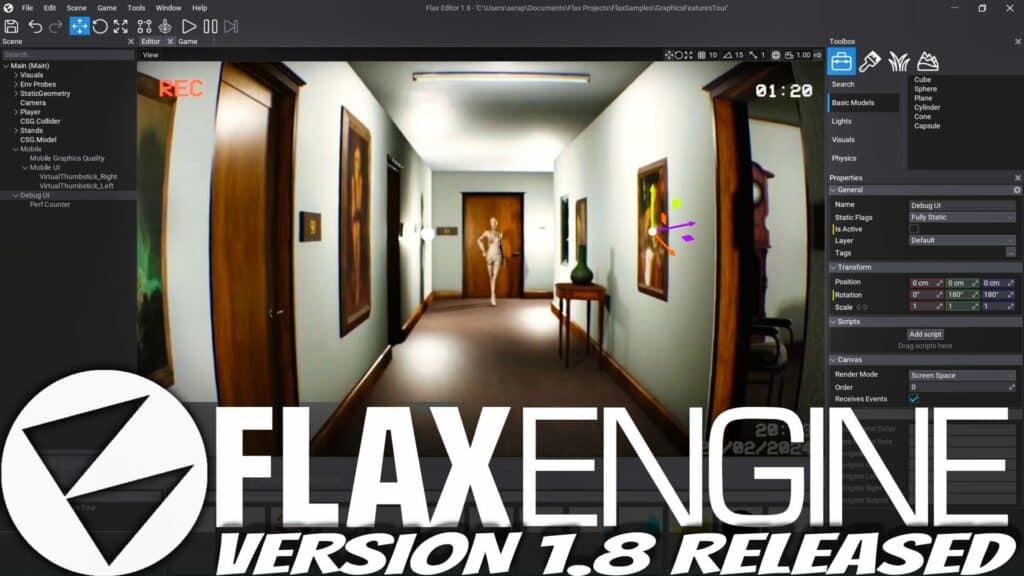

Flax Engine 1.8 Released

The Flax 3D game engine team has just released Flax Engine 1.8. This release adds several new features, including .NET 8 / C# 12 support, a new integrated UI building tool, and much more. Details of the Flax Engine 1.8 release from the Flax blog: We’re happy to release Flax 1.8! It brings lots of great […]
