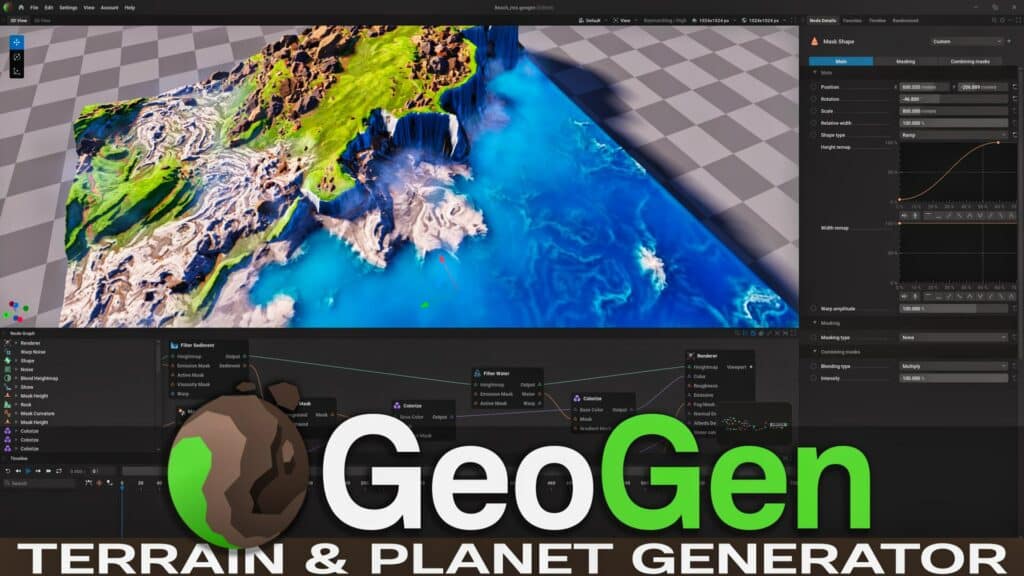

GeoGen – Procedural Terrain and Planet Generator

Today we are checking out GeoGen, an application for procedurally generating terrain, landscapes, and planets from JangaFX, the creators of EmberGen and the free Game Texture Viewer. GeoGen is currently still in alpha (although it is already available for sale) and uses a graph-based workflow for creating landscapes and planets. GeoGen […]