I finally decided to check out LibGDX, a very cool and popular Java-based cross-platform game development library. I’ve been meaning to check out LibGDX for ages; it’s the Java part that turned me off. It’s not the programming language, Java is a really good one, it’s the ecosystem. It always seems that when dealing with Java I deal with a whole slew of new headaches. Often it seems the cause of those headaches is Google. Today, sadly, was no exception. This post reflects not at all upon LibGDX. A long time ago I intended to run a series of PlayN tutorials, but I spent so much time supporting the build process or the fragility of Eclipse that I simply gave up. Fortunately LibGDX ships with a setup tool that makes a bunch of these problems go away, but it certainly hasn’t solved the problems completely.

The first problem is partially on me, partially on Eclipse and a great deal on Java. I installed Eclipse as it’s the preferred environment for LibGDX and often trying to do things the non-Eclipse way can really make things more difficult, at least initially. ( I massively prefer IntelliJ to Eclipse ) Right away I fire up Eclipse and am greeted with:

failed to load the JNI shared library

If you are a veteran developer this is no doubt immediately obvious to you. I checked the most common things first: make sure the JDK is installed, make sure JAVA_HOME is set, make sure javac runs from the command line, and run javac -version to make sure things are working right. In the end it was a version mismatch: I had downloaded the 64bit version of Eclipse and the 32bit version of the JDK. The obvious answer is to download the 64bit JDK, but I really wouldn’t recommend that. Amazingly enough, in 2013 the 64bit version still causes problems. For example, FlashDevelop won’t work with it. That is just one of a dozen applications I’ve seen that won’t run with the 64bit version.
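For what it’s worth, here is a minimal sketch of how you could ask the JVM itself whether it is 32bit or 64bit, rather than guessing from the installer you downloaded. The class name `JvmBitness` and the `describe` helper are my own, made up for illustration. `os.arch` is a standard system property, while `sun.arch.data.model` is, as far as I know, specific to Sun/Oracle-style JVMs and may be missing elsewhere, so treat this as a rough check and not a definitive one.

```java
public class JvmBitness {

    // Hypothetical helper: classify the JVM from two system property values.
    // dataModel is expected to be "32" or "64" when the JVM provides it;
    // osArch is something like "x86" or "amd64".
    static String describe(String dataModel, String osArch) {
        if ("64".equals(dataModel)) {
            return "64-bit";
        }
        if ("32".equals(dataModel)) {
            return "32-bit";
        }
        // Fallback (assumption): architecture names containing "64"
        // (amd64, x86_64, aarch64) indicate a 64-bit JVM.
        if (osArch != null && osArch.contains("64")) {
            return "64-bit";
        }
        return "unknown";
    }

    public static void main(String[] args) {
        // sun.arch.data.model may be null on JVMs that do not define it.
        String dataModel = System.getProperty("sun.arch.data.model");
        String osArch = System.getProperty("os.arch");
        System.out.println("JVM looks like: " + describe(dataModel, osArch));
    }
}
```

Compile and run it with the same JDK that Eclipse is picking up. If the answer doesn’t match the Eclipse build you downloaded, that mismatch is the likely cause of the JNI error.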

Not really a big deal. Eclipse should have done a heck of a lot better job with the error message. If it said “64bit JDK required” this would have taken about 10 seconds to solve. Keep in mind this is a problem that’s existed for 3 or 4 years, so don’t expect an improvement on the Eclipse side any time soon. Accessibility and polish have never really been a priority when it comes to Eclipse. The fact 64bit Java is still problematic, that’s 100% on Java though!

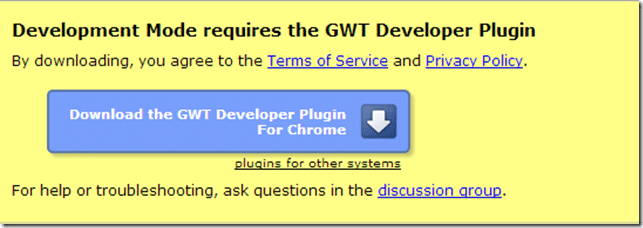

Anyways, other than fighting with Eclipse and Java, everything went wonderfully with LibGDX. I got my project made and configured without issue ( beyond, oddly enough, the Setup UI wanting the original GDX install zip file, which I had already deleted… oops ). Then when I went to run the HTML5 target in Chrome I got:

I click the Download the GWT Developer Plugin button.

Nothing. No error, no page load, nothing. I reload and try again.

Nothing.

Check my internet connection… all good.

Odd.

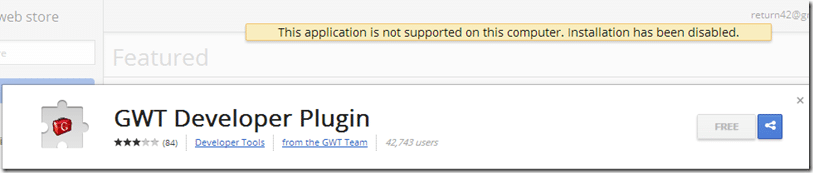

So then I grab the URL for that button and it loads a Chrome Store page ( at least it did something! )

This application is not supported on this computer. Installation has been disabled.

Lovely. If I had gotten that message when I clicked the initial link, things would have made a great deal more sense. Want to know why I hate Google for this one?

First off… it works in Internet Explorer and Firefox. It’s only in Chrome that the GWT developer plugin doesn’t work ( on Windows 8 as it turns out ). But that’s not the hatred part.

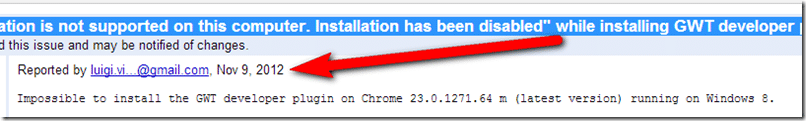

Take a look at GWT issue 7778.

"This application is not supported on this computer. Installation has been disabled" while installing GWT developer plugin on Chrome

So, it’s a known issue with Google’s developer tool working in Google’s browser on Windows 8. But the hatred part…

November 9th, 2012. It’s been a known and reported problem for almost a year. Fixed? God no!

Welcome to working in Google tools. You will spend a stupid amount of time trying to debug what the problem is, only to find yourself looking at year(s) old tickets that have been ignored by Google. When working in Android I ran into common tickets with hundreds of comments that were years old on nearly a daily basis.

This isn’t a one-off rant, this kind of stuff happens EVERY SINGLE TIME I work with Google technology. I have literally never once NOT run into a problem, from Android, to Dart, to NaCl, to GWT. Every single time.

It’s not such a big deal for me. I’ve got enough experience working with Google tools to expect minimal or wrong error messages when problems occur, and half-assed support when I research the problem. Frankly I just stay the hell away when I can. Where it’s a gigantic deal breaker is when a new developer runs into these additional hassles. In some ways it’s kind of infuriating, as great libraries like LibGDX become that much harder for developers to access.