So last week I decided to run a poll to see which game development technology people would be most interested in, and the results actually shocked me:

Haxe narrowly edged out LibGDX (by two votes), while my original plan of HTML5 came in a distant third. The “Other” category was mostly made up of people interested in MonoGame.

I have long been a fan of C# and XNA, so MonoGame was an obvious option. It was (and still is) ruled out for a couple of reasons. First is the inability to target the web. It’s a shame Microsoft put a bullet in Silverlight, as otherwise this limitation wouldn’t exist. Second, paying $300 to deploy on iOS and another $300 to deploy on Android is a bit steep. LibGDX suffers from this to a degree too, as you need Xamarin to target iOS. Hopefully this will change in time.

Hello Haxe World

So over the last couple of days I’ve taken a closer look at Haxe, or more specifically Haxe + NME. Haxe as a programming language is an interesting choice. It has an ActionScript-like syntax and can compile to its own virtual machine, much like Java (the VM is called Neko, so if you were wondering what Neko is… well, now you know!). If that was all Haxe did, we wouldn’t be having this conversation. More interestingly, it compiles to a number of other languages and platforms, including JavaScript (for HTML5), Flash (ActionScript) and C++. As a result, you can compile to C++ for Android and iOS and get native performance, which can make Haxe code many times faster than the equivalent ActionScript.
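To make the multi-target idea a little more concrete, here is a minimal sketch of a single Haxe source file. The file, class and function names are just for illustration, and the build commands in the comments use the standard Haxe compiler flags (`-main`, `-neko`, `-js`, `-swf`, `-cpp`); exact flags and output paths may vary between Haxe versions.

```haxe
// Main.hx: a minimal Haxe program. The same source compiles to several
// targets by changing only the compiler flag, for example:
//   haxe -main Main -neko main.n    (then run it with: neko main.n)
//   haxe -main Main -js main.js
//   haxe -main Main -swf main.swf
//   haxe -main Main -cpp bin        (needs the hxcpp library installed)
class Main {
    // Hypothetical helper, kept separate so it is easy to check.
    public static function greeting():String {
        return "Hello Haxe World";
    }

    static function main() {
        // trace() writes to each target's debug output (console, browser
        // console, or the Flash player's trace output).
        trace(greeting());
    }
}
```

Nothing in the source mentions a platform; the target is chosen entirely on the command line.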

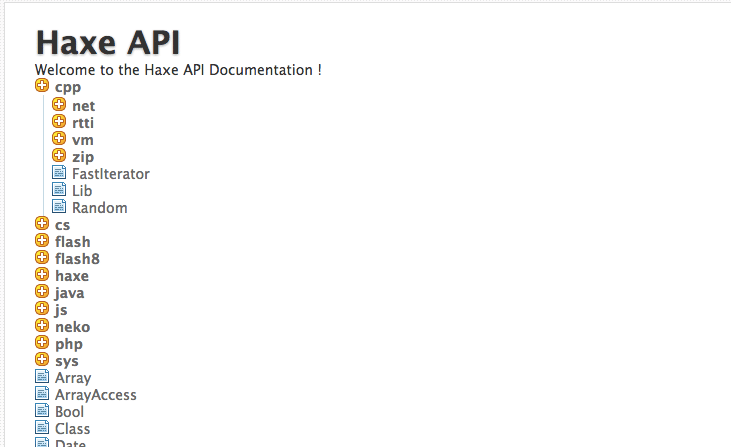

There is, however, a serious flaw with Haxe. Let’s take a quick look at part of the Haxe API and you will see what I mean:

Notice how there are a number of language-specific APIs, such as cpp, which I’ve expanded above. So what happens if you use the cpp Random API and want to target Flash? Short answer: you can’t. Of course, you could write a great deal of code full of “if platform X, do this, otherwise do that” branches, but you will quickly find yourself writing the same application once per platform. So what do you do if you want to target multiple platforms with a single code base?
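In Haxe, those “if platform X” branches are usually written with conditional compilation. The sketch below (the `TargetInfo` class and its methods are hypothetical names) uses the compiler’s built-in `cpp`, `flash`, `js` and `neko` defines so that only the branch for the current target gets compiled, and contrasts that with `Std.random`, which the standard library provides on every target.

```haxe
// TargetInfo.hx: a hypothetical helper showing Haxe conditional compilation.
// Only the branch whose define matches the current target is compiled, so
// target-only packages (cpp.*, flash.*, js.*, neko.*) may be used inside
// their own branch without breaking the build for other targets.
class TargetInfo {
    public static function name():String {
        #if cpp
        return "cpp";
        #elseif flash
        return "flash";
        #elseif js
        return "js";
        #elseif neko
        return "neko";
        #else
        return "other";
        #end
    }

    // Portable alternative: Std.random(max) is part of the standard library
    // on every target and returns an Int from 0 up to (but not including) max.
    public static function rollDie():Int {
        return Std.random(6) + 1;
    }

    static function main() {
        trace("Compiled for " + name() + ", rolled a " + rollDie());
    }
}
```

Every `#if` branch is code you have to write and test separately for each target, which is exactly the duplication described above.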

Meet NME

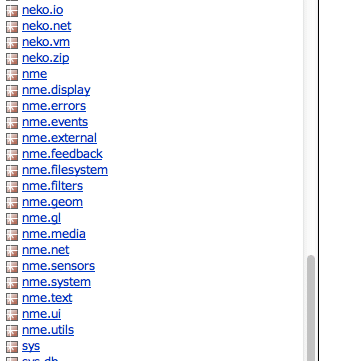

This is where NME comes in. NME builds on the Haxe programming language and layers a Flash-like API over it. Most importantly, it supports Windows, Mac, Linux, iOS, Android, BlackBerry, webOS, Flash and HTML5 from a single code base. Let’s take a quick look at part of the NME libraries to show you what I mean:

There are still platform/language-specific libraries, like neko.vm above. There are also the nme.* libraries, which provide a Flash-like API for the majority of programming tasks needed to make a game. Write your code against these libraries, minimize your use of native libraries, and you can target a number of different platforms with a single code base.
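As a rough illustration of code written only against the nme.* packages, here is a minimal sketch assuming an NME 3.x project whose main class is set to `ColoredSquare` in its project file (the class name is hypothetical). It relies only on `nme.display.Sprite`, the Flash-style `graphics` drawing calls and `nme.Lib.current`, so the same source should build for any of the targets NME supports.

```haxe
// ColoredSquare.hx: a hypothetical NME 3.x main class that uses only
// nme.* packages, so no target-specific code is involved.
import nme.display.Sprite;
import nme.Lib;

class ColoredSquare extends Sprite {
    public function new() {
        super();
        // Flash-style drawing API: fill a 100x100 blue square.
        graphics.beginFill(0x3366CC);
        graphics.drawRect(0, 0, 100, 100);
        graphics.endFill();
        // Offset the square from the top-left corner of the stage.
        x = 50;
        y = 50;
    }

    public static function main() {
        // Lib.current is the root display object, mirroring flash.Lib.current.
        Lib.current.addChild(new ColoredSquare());
    }
}
```

Compare this with the `#if` approach: there is no per-platform branch at all, because NME supplies the implementation of `Sprite` and `Graphics` for each target.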

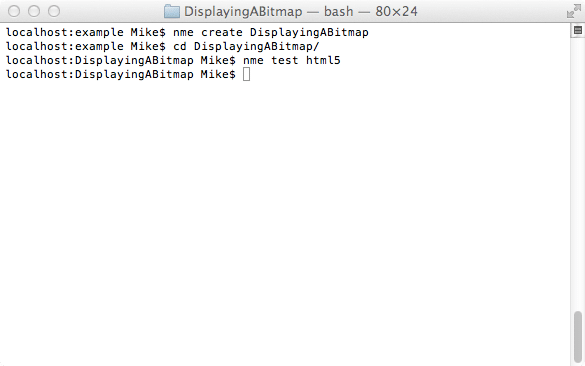

There is another aspect of NME that makes life nice. If you read my review of the Loom Game Engine, you may recall I was a big fan of its command line interface. NME has a very similar interface. Once you’ve installed it, creating a new project from one of the samples is as simple as typing `nme create samplename`; you can then test it by running `nme test platform`.

Here is an example of creating the DisplayingABitmap sample and running it as an HTML5 project:

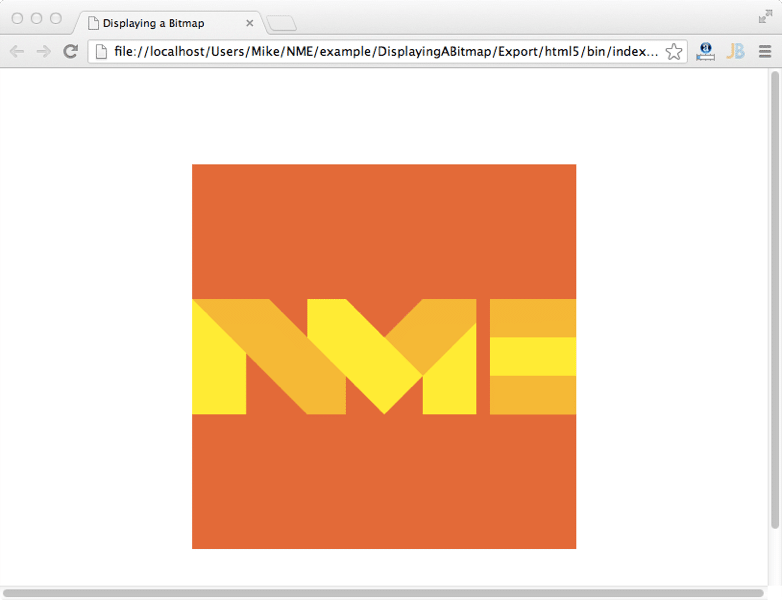

And your browser will open:

What makes this most impressive is that when you target C++, it kicks off the build for you using the included toolchain. Configuring each platform is as simple as running `nme setup platformname`; valid platforms are currently windows, linux, android, blackberry and webos. Unfortunately, Xcode can’t be installed automatically, so this process won’t work for iOS or Mac; you need to set up Xcode yourself. As you can see, setting up and running NME/Haxe is quite simple, and it worked perfectly for me out of the box. If you are curious which sample names you can use, you can find them in the GitHub directory. There are a fair number of samples to start with.

Now is when things take a turn for the worse… getting an IDE up and running. This part was a great deal less fun for me. You can get basic syntax highlighting and autocompletion working in a number of different IDEs; here is the list of options. This is when things got rather nasty. I was working on MacOS, which presented a bit of a catch. I tried getting IntelliJ to work. Adding the plugin is trivial, although for some reason you need to use the zip version, as it’s not in the plugin directory. Configuring the debugger, though… that’s another story. I spent a couple of hours googling, and sadly only found information that was a year or two old.

Then I tried MonoDevelop. There is a plugin available and it’s supposed to be a great option on MacOS. And… MonoDevelop 3 is no longer available. It’s now Xamarin Studio 4, and the plugin doesn’t work anymore. Good luck getting a download for MonoDevelop 3! There is also FDT, which I intend to check out, but it’s built on top of Eclipse and I HATE Eclipse. Eventually I got Flash debugging working in IntelliJ, but it was a great deal less fun.

After this frustrating experience, I rebooted into Windows and things got a TON better. FlashDevelop is easily the best option for developing with Haxe, but sadly it’s only available on Windows. There was, however, a major catch… debugging simply did not work. After some digging, it turns out you have to run the 32-bit JDK or it doesn’t work. Seriously, in this day and age, still having Java VM problems like this is just insane. Once I got that licked, I was up and running.

At this point I have a working development environment and can get to coding. If you are working on Windows with FlashDevelop, you can get up and running very easily, so long as you are running 32-bit Java. On MacOS, though, expect a much rockier experience. It would be great if FlashDevelop could be ported to Mac, but apparently it can’t be… there have been a number of attempts. They have, however, provided a configuration for running it in a virtualized setting (VMware, Parallels, etc.).

Stay tuned for some code related posts soon.