To celebrate its 40th anniversary, Atari has re-released a number of its classic games in HTML5 on its newly launched web arcade. The titles include:

- Asteroids

- Centipede

- Combat

- Lunar Lander

- Missile Command

- Pong

- Super Breakout

- Yar’s Revenge

As you can see, the games have received a facelift:

Asteroids:

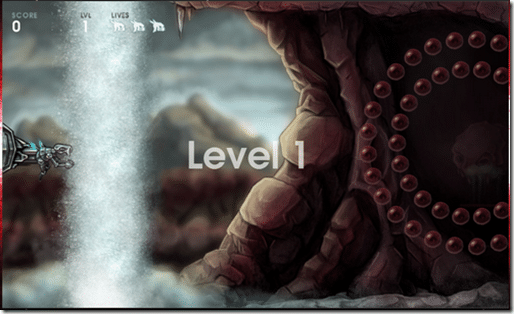


The project is a team-up between Atari, CreateJS and Microsoft. The Microsoft connection is Internet Explorer 10, which lets you play the arcade ad-free. Atari is also releasing an SDK for publishing games on the arcade, but the download and documentation page is currently down, so details are sparse right now. The quick start PDF is available, however, and gives a glimpse into the process. Presumably the arcade will work on a revenue-sharing scheme, but that is just guesswork at the moment.

All of the games were built with CreateJS, a suite of HTML5 libraries that includes:

- EaselJS – an HTML5 Canvas library with a Flash-like API

- TweenJS – a chainable tweening library

- SoundJS – an HTML5 audio library

- PreloadJS – an asset loading and caching library

The suite also includes a newly added tool, Zoe, which takes SWF Flash animations and generates sprite sheets from them.

I look forward to digging into Atari’s new API once the documentation page is back online. Atari has also created a GitHub repository to support the project, but it is a little sparse for now. In Atari’s own words:

> Welcome to the Atari Arcade SDK. This is the initial release of the SDK, which we hope to evolve over the next few weeks, adding:
>
> - more documentation
> - examples
> - updates
>
> This repository contains:
>
> - Atari Arcade SDK classes in scripts/libs
> - scripts necessary to run the SDK locally, in scripts/min
> - API documentation and a quick start guide in docs/
> - A test harness page to bootstrap and launch games

All told, it’s a pretty cool project. At the very least, check out the arcade; it’s a great deal of fun.
